Four technology companies involved in artificial intelligence (AI) development announced on Wednesday a new funding initiative aimed at supporting AI safety research.

The new initiative, called the AI Safety Fund, is supported by Google, Microsoft and AI companies Anthropic and OpenAI. The four companies and a handful of philanthropic partners have already committed more than $10 million to the initiative, the companies said in a joint statement.

The announcement follows the July 2023 launch of the Frontier Model Forum, which the four tech companies have described as “an industry body focused on ensuring safe and responsible development of frontier AI models.” The forum lists supporting AI safety research and working with policymakers among its key objectives.

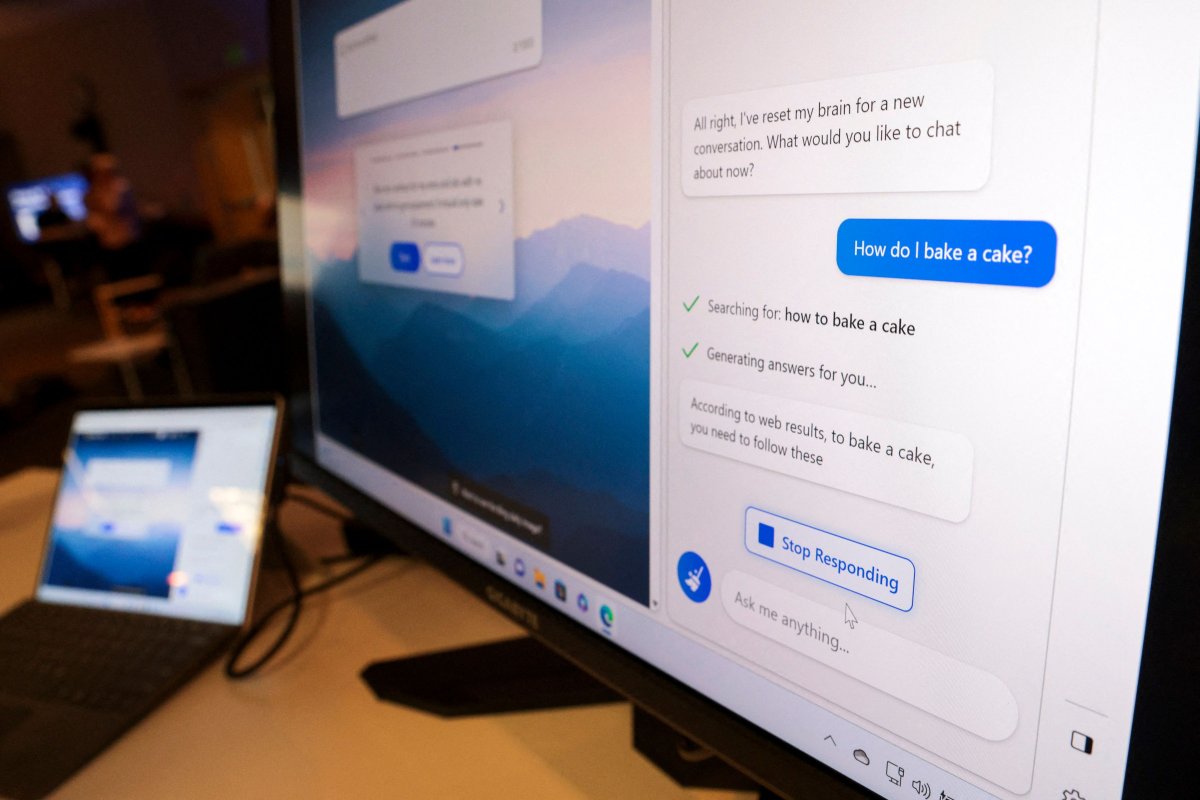

In their AI Safety Fund announcement, the four companies acknowledged the rapid pace of AI development over the last year. As several tech experts noted in an unrelated paper about AI risks published earlier this week, one of OpenAI’s early chatbots “could not reliably count to ten” as recently as 2019 but is now able to respond swiftly to textual, visual and auditory prompts. About 180 million global users were estimated to be using OpenAI’s ChatGPT about nine months after its November 2022 launch, according to Reuters.

Industry experts have repeatedly called for safety research in response to the speed of AI development. Some have suggested AI companies pause their development of new, or “frontier,” AI until precautionary guardrails can be put into place.

In the AI Safety Fund announcement, the four tech companies agreed that AI safety research “is required.” The funding initiative “will support independent researchers from around the world affiliated with academic institutions, research institutions, and startups,” with initial funding pledges coming from the four companies and four named partners: the Patrick J. McGovern Foundation, the David and Lucile Packard Foundation, Eric Schmidt and Jaan Tallinn.

“As one of the world’s largest funders of pro-social AI, the Patrick J. McGovern Foundation brings civil society into dialogue with traditional technology companies to raise awareness of new opportunities and vulnerabilities,” a foundation spokesperson said in an emailed statement shared with Newsweek. The spokesperson added that AI safety “isn’t just a technical outcome” but “a multistakeholder process to balance engineering safeguards with the interests and needs of consumers and communities.”

“The AI Safety Fund brings together a diverse collection of technologists and civil society advocates to answer a critical question: leaving aside vague questions of existential risk, how do we accelerate research to build safe, effective products that promote human welfare?” the spokesperson wrote.

The safety funding initiative arrives days before the world’s first global summit on AI safety, which the United Kingdom (U.K.) is hosting next week. A spokesperson for the U.K.’s Department for Science, Innovation & Technology recently told Newsweek that government officials acknowledge the rapid pace of AI progress and intend to “work closely with partners to understand emerging risks and opportunities to ensure they are properly addressed.”

JASON REDMOND/AFP via Getty Images

