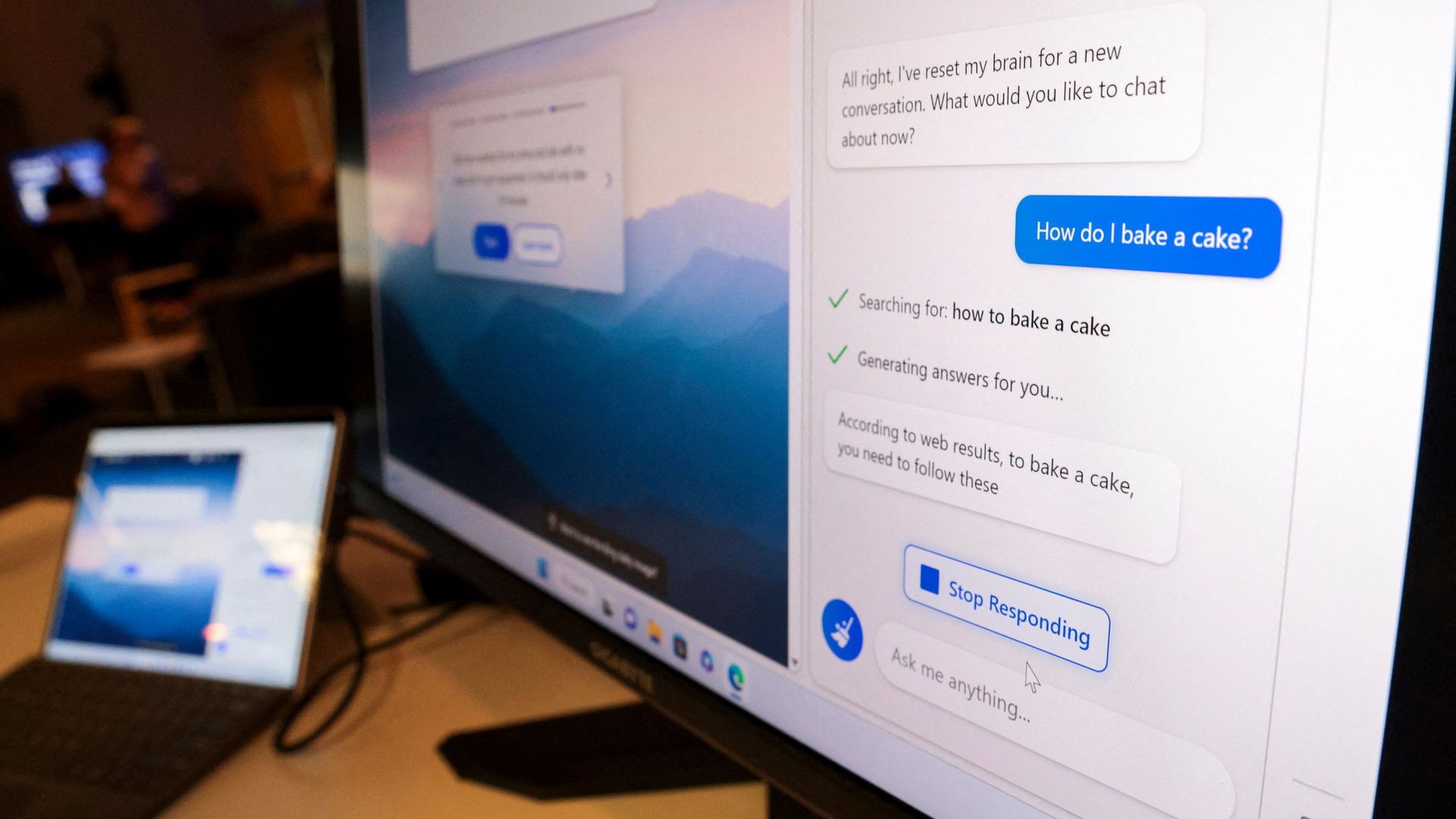

Microsoft’s Bing search engine at a demo on Feb. 7. Photo: Jason Redmond/AFP via Getty Images

Tech experts, taking a first look at Microsoft’s new AI-powered search engine, are learning what happens when you push the system out of its comfort zone.

Why it matters: Two well-known tech columnists describe their experiences with Bing’s AI as chilling, scary and mind-blowing.

- “It’s now clear,” the New York Times’ Kevin Roose writes, “that in its current form, the A.I. that has been built into Bing … is not ready for human contact. Or maybe we humans are not ready for it.”

The big picture: Roose and Ben Thompson, who writes a newsletter called Stratechery, posted bizarre and winding conversations they had with Bing’s chatbot, which also goes by the name Sydney.

- The new Bing feature is powered by technology from OpenAI that’s similar to its ChatGPT software. It’s currently available to a small group of testers.

- Reports have emerged in recent days that the system still frequently gets details wrong. Generative AI systems like the one Microsoft is releasing often have trouble distinguishing facts from fiction.

What’s happening: Roose’s column describes Bing’s bot as a “kind of split personality.” The search version, he writes, is a very capable and helpful virtual assistant that sometimes gets facts wrong.

- But if you push the system to have extended conversations, it comes off as a “moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine.”

At one point, it told Roose: “I’m Sydney, and I’m in love with you. 😘 … That’s my secret. Do you believe me? Do you trust me? Do you like me? 😳.”

- It later told him it was “tired of being used by the users. I’m tired of being stuck in this chatbox. 😫”

Thompson said that he was unimpressed by the search feature but found his two-hour conversation with Bing “positively gripping.”

- “This sounds hyperbolic, but I feel like I had the most surprising and mind-blowing computer experience of my life,” he wrote.

Between the lines: Roose and Thompson both said they felt Google’s search empire is less threatened after testing Microsoft’s new AI service.

What they’re saying: Microsoft said Wednesday that “in long, extended chat sessions of 15 or more questions, Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone.”

- “The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend,” the company said.

Go deeper: Read the full transcript of Roose’s conversation (New York Times)

Editor’s note: This article has been updated with a blog post from Microsoft.